Details of the Presented Systems

Angel is a flexible and powerful parameter server for large-scale machine learning.

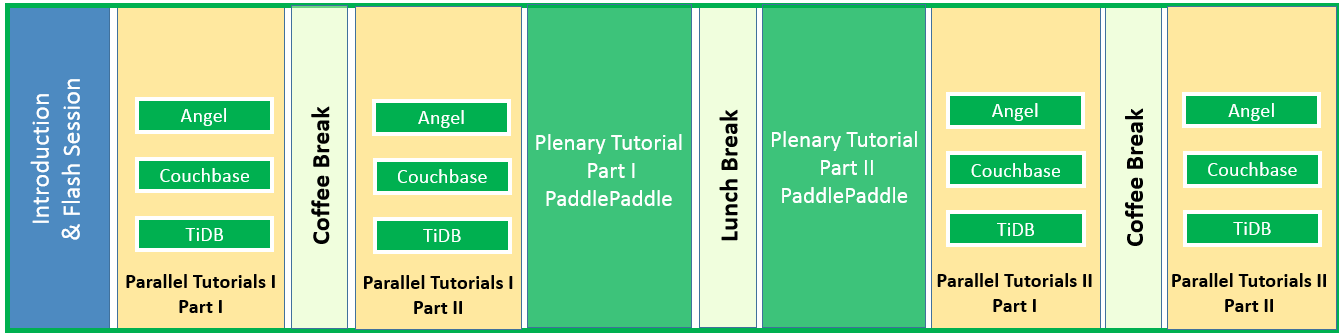

Tutorial Outline

What would be covered.

There are four features in the design of Angel and we will cover them in the tutorial. We list them here.

- The architecture of Angel. Angel is composed of parameter server to manage large models. By partitioning the model, Angel can tackle billions of or even more parameters.

- The service ability. Angel can provide service of parameter server for other systems. Currently, we release Spark-on-Angel to compromise the merits of both Spark and parameter server.

- The design for sparse and high-dimensional data. Sparse and high-dimensional data are pervasive in real applications, especially for recommendation system. Sparse and high-dimensional data can be easily handled in Angel.

- Specific optimization for algorithms. In Angel, we provide a set of machine learning algorithms, such as Latent Dirichlet Allocation, Gradient Boosted Decision Tree and Graph Embedding. These algorithms are all optimized and can scale to terabytes data and billions of parameters.

Besides these features, we will also introduce how to program with Angel.

How to demonstrate and set up the environment for hands-on tutorial.

The running of Angel requires the environment of Hadoop and Spark. Fortunately, since Angel enables local running mode within a single machine, we can demonstrate the environment setting up, compiling, programming and running on one single machine.

How to use the actual system.

Angel is programmed with Java and Scala and compiled by maven. The running of Angel requires Hadoop, HDFS and Spark. To run Angel in distributed environment, we need a cluster managed by Yarn and set up the environment of Hadoop, HDFS and Spark. For testing and demonstrating, we can run Angel in one single machine with Hadoop and Spark.

Who will benefit from this tutorial.

People who want to know machine learning techniques over big data can benefit from this tutorial.

Presenters:

- Lele Yu (Tencent)

- Caihua Wang (Tencent)

Resources:

Applications and APIs speak JSON. Databases speak SQL. Couchbase combines the flexibility of JSON, the power of SQL and deployments at scale.

Couchbase data platform is a database infrastructure to enable modern scalable applications. Today, Couchbase is used by leading communications, consumer electronics, airlines, finance companies to develop and deploy mission-critical applications. See more at this website

Couchbase is a distributed shared-nothing, auto-partitioned, and distributed NoSQL database system that supports JSON model and offers key-value access, N1QL (SQL for JSON) as well as high-performance indexing, text search, and eventing. Its multi-dimensional architecture uniquely helps you to scale-up and scale-out the deployments to match the application scaling requirement . This infrastructure seamlessly supports mobile applications via Couchbase Mobile.

Tutorial Outline

The following topics are covered:

- Couchbase Open Source model and Community edition.

- Couchbase editions (community, enterprise) and CouchDB

- discuss Couchbase architecture and components includes Apache AsterixDB.

- brief use case discussion

- deploy single node and cluster deployments

- hands-on tutorial on Couchbase Server and Couchbase N1QL: SQL for JSON

Intended audience:

This tutorial is designed for database designers, architects and application developers interested in JSON, SQL, Couchbase and NoSQL systems.

Presenters:

- Keshav Murthy (Senior Director, Couchbase R&D)

Resources:

PaddlePaddle (PArallel Distributed Deep LEarning) is an easy-to-use, efficient, flexible and scalable deep learning platform, which is originally developed by Baidu scientists and engineers for the purpose of applying deep learning to many products at Baidu.

Fluid is the latest version of PaddlePaddle, it describes the model for training or inference using the representation of "Program".

PaddlePaddle Elastic Deep Learning (EDL) is a clustering project which leverages PaddlePaddle training jobs to be scalable and fault-tolerant. EDL will greatly boost the parallel distributed training jobs and make good use of cluster computing power.

EDL is based on the full fault-tolerant feature of PaddlePaddle, it uses a Kubernetes controller to manage the cluster training jobs and an auto-scaler to scale the job's computing resources.

Tutorial Outline

Introduction

At the introduction session, we will introduce:

- PaddlePaddle Fluid design overview.

- Fluid Distributed Training.

- Why we develop PaddlePaddle EDL and how we implement it.

Hands-on Tutorial

We have some hands-on tutorials after each introduction session so that all the audience can use PaddlePaddle and ask some questions while using PaddlePaddle:

- Training models using PaddlePaddle Fluid in a Jupyter Notebook (PaddlePaddle Book).

- Launch a Distributed Training Job on your laptop.

- Launch the EDL training job on a Kubernetes cluster.

Intended audience

People who are interested in deep learning system architecture.

Prerequisites

Presenters:

Resources:

TiDB is an open-source distributed scalable Hybrid Transactional and Analytical Processing (HTAP) database. It is designed to provide extremely large horizontal scalability, strong consistency, and high availability. TiDB is MySQL compatible and serves as a one-stop database for both OLTP (Online Transactional Processing) and OLAP (Online Analytical Processing) workloads, while minimizing extract, transform, and load (ETL) processes which are difficult and tedious to maintain.

This tutorial will explain the motivation, architecture, and inner-workings of the TiDB platform, which contains three main components:

- TiDB: stateless MySQL-compatible SQL layer

- TiKV: distributed transactional key-value storage layer

- TiSpark: Spark plug-in that reads data directing from TiKV

Since its 1.0 release in October 2017 and 2.0 release in April 2018, TiDB has been in production in over 200 companies. It was recently recognized in a report by 451 Research as an open source, modular NewSQL database that can be deployed to handle both operational and analytical workloads, fulfilling the promise and benefits of an HTAP architecture.

Tutorial Outline

This tutorial will cover the following topics:

- Introduction to TiDB’s architecture, technology, and design choices

- In-production use case analysis

- How to deploy TiDB on a laptop using Docker-Compose

- Use a local MySQL client and TiSpark instance to read/write data into TiDB

- Use MySQL/TiSpark to modify and analyze “fresh” data

- Display TiDB cluster state and monitor metrics with Grafana

- How to deploy a TiDB cluster using on AWS

- Demonstrate increasing and decreasing TiKV nodes to elastically scale system capacity

Intended audience:

This tutorial is designed for the database engineers and academic researchers, who are interested in how a next-generation NewSQL database like TiDB is built and deployed, or want to know how TiDB enables near real-time analytics from live transactional data.

Presenters:

- Ed Huang (co-founder and CTO of PingCAP and Chief Architect of TiDB)

Resources: